Bridging Research and Industry in AI

I design, lead, and build AI solutions—spanning both research and production—from hands-on coding to team leadership and academic publishing. My current focus is on Responsible AI and Generative AI.

Over 7 years in academia—culminating in a tenure-track position at the Dutch National Center for Math & CS—shaped my ability to think critically and creatively about complex problems. The past years in senior industry roles have expanded that foundation, from building production-grade ML systems to leading teams and guiding strategy. Today, I thrive at the intersection of research and application, bridging cutting-edge innovation with real-world implementation. From time to time, I still provide academic service.

InSilicoTrials Technologies (NL)

INGKA Digital [IKEA] (NL)

Dutch National Center of Math & Computer Science [CWI] (NL)

TU Chalmers (SE)

Dutch National Center of Math & CS + TU Delft (NL)

National Math & CS Center + TU Delft, NL

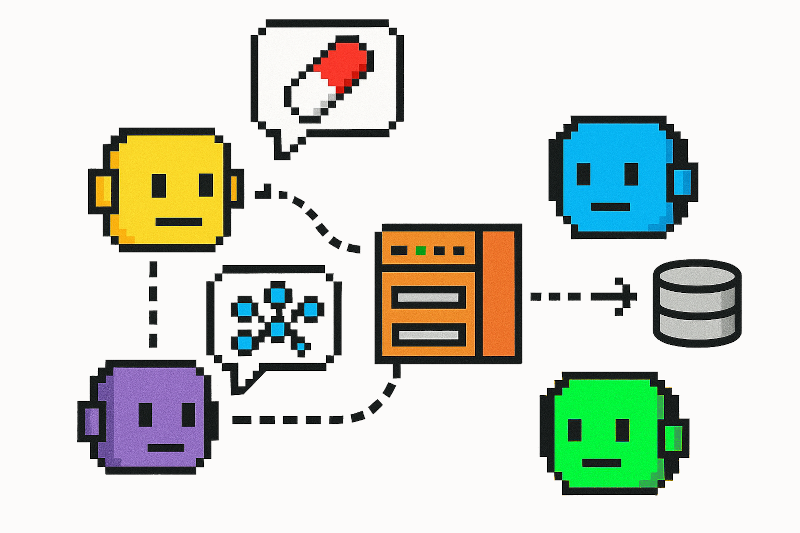

Led the development of, and contributed hands-on to, several LLM-based systems, including a multi-agent system with a Model Context Protocol (MCP) server that exposes specialized tools.

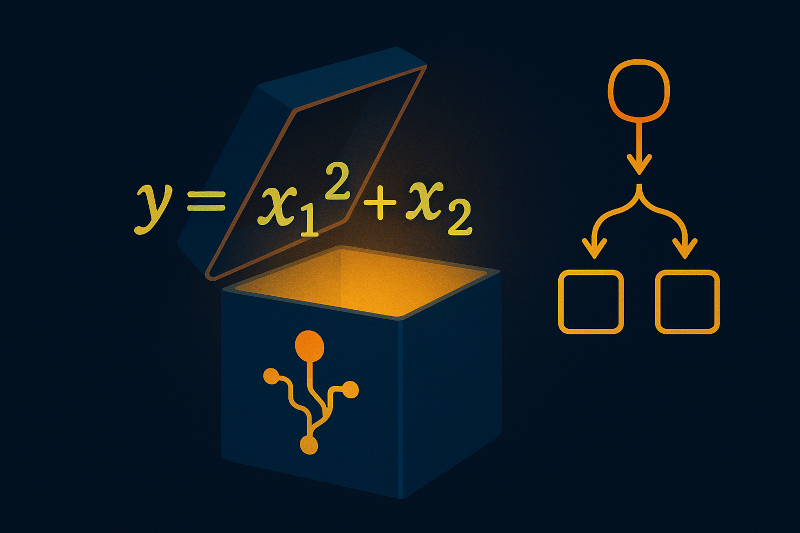

Developed and published algorithms that discover models as human-interpretable mathematical equations (symbolic regression) or that explain black-box models with counterfactual explanations.

Worked on responsible AI, including a contribution to the FDA's guidance proposal on the use of AI for drugs and the development of a technical protocol for quantifying the fidelity, utility, and privacy of synthetic data.

Interested in having a chat or collaborating? Feel free to reach out through any of these channels or fill out the form below.